Seal the Secret !!

2022-Aug-18

By: DALEEP SINGH

2020-Jul-13 03:07:05

In part 1, we saw how the container was allocated an IP address, how a service was created to provide a static mapping to the pods, and how the OVS SDN created the corresponding iptables rules for the pod IP and service IP to forward the traffic. In this part of the journey, we will continue from that point onward.

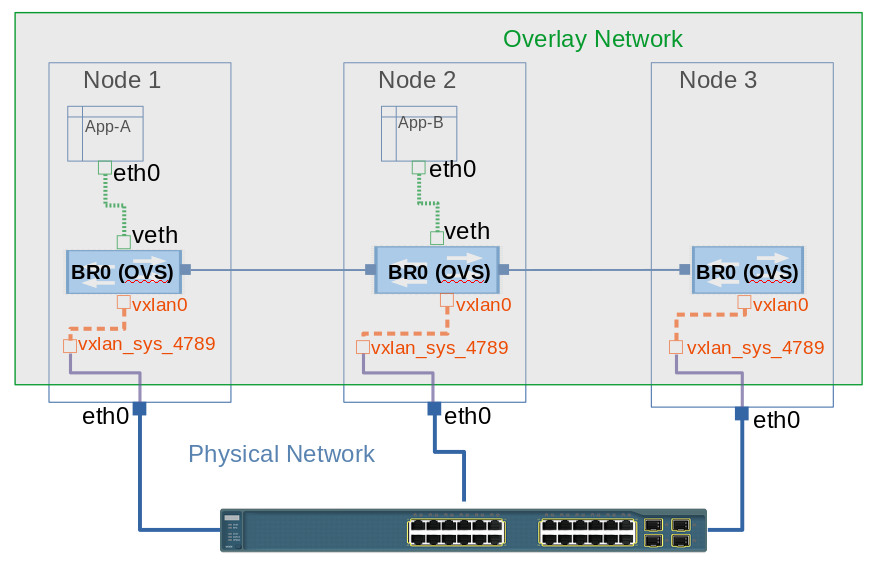

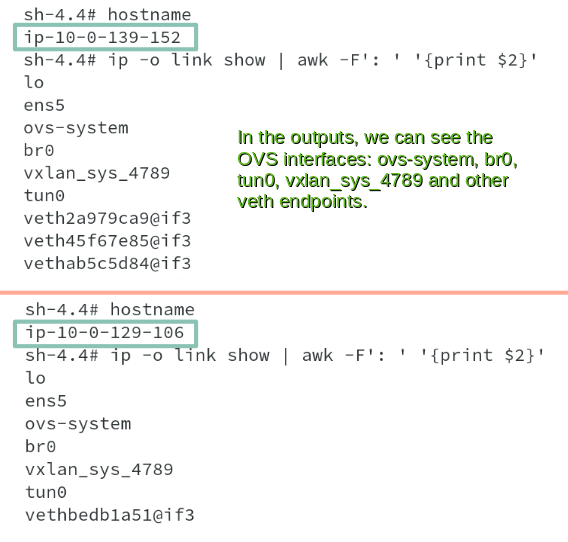

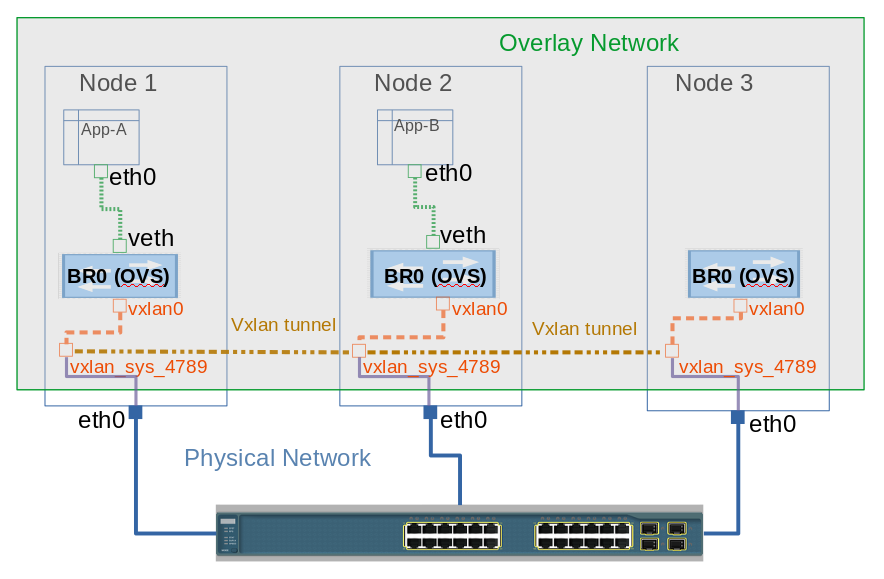

To enable a transparent and resilient network solution between the cluster nodes, OVS creates certain virtual interfaces on all cluster nodes, namely:

br0: This is the OVS bridge to which all containers are attached using veth pairs. It acts as the central connecting point for all interfaces on the host, including tun0 and vxlan0.

tun0: This is an OVS internal port used for external network access. The SDN creates netfilter rules that enable access to the external network using NAT.

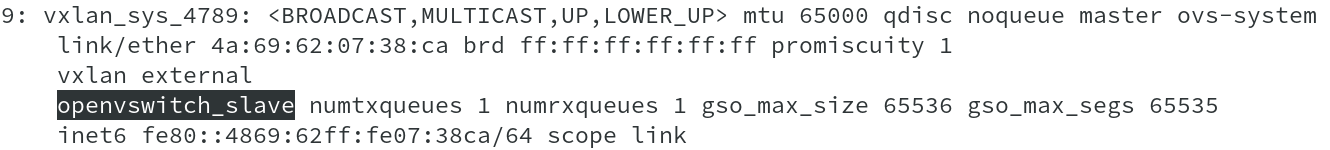

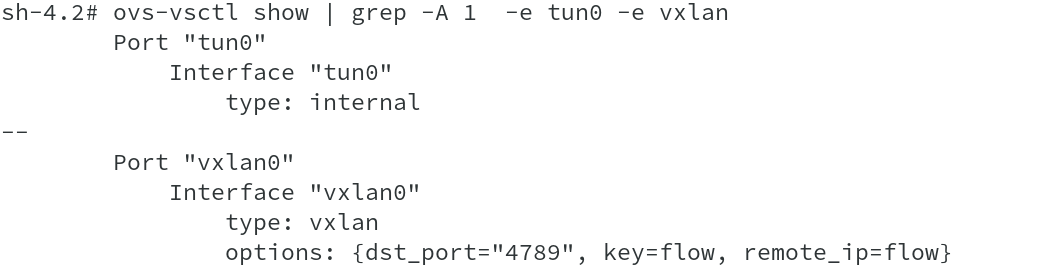

vxlan: OVS creates a VXLAN device, vxlan_sys_4789, which is configured in promiscuous mode. OVS creates this interface in the Linux kernel itself, and it captures all traffic on UDP port 4789. OVS also creates another interface, vxlan0, which is internal to OVS.
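To check the promiscuous-mode claim on your own node, the flags that `ip` prints for the device are enough. Below is a minimal POSIX shell sketch, assuming the one-line `ip -o link show` output format of iproute2; the function name `has_link_flag` is hypothetical and not part of any OpenShift tooling.

```shell
# Hypothetical helper: succeed if the interface line read on stdin
# (from `ip -o link show <dev>`) lists the given flag inside its <...> set.
has_link_flag() {
  flag="$1"
  # Extract the comma-separated flag list between '<' and '>'.
  flags=$(sed -n 's/^[0-9]*: [^:]*: <\([^>]*\)>.*/\1/p' | head -n 1)
  # Wrap both sides in commas so that, e.g., UP does not match LOWER_UP.
  case ",${flags}," in
    *",${flag},"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage on a node (as root, with iproute2 available):
#   ip -o link show vxlan_sys_4789 | has_link_flag PROMISC && echo promiscuous
```

Matching against the whole comma-separated flag list, rather than grepping for the word anywhere in the line, keeps a check for `UP` from accidentally matching `LOWER_UP`.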

To understand this better, let us follow the diagram above. It shows that container App-A uses a veth pair, with one end connected to eth0 inside the container and the other end connected to a port on br0, the OVS bridge. br0 forwards the traffic out through the VXLAN tunnel: vxlan0 is the interface internal to OVS, and vxlan_sys_4789 is the external interface on the host side. From there, OVS flows forward the packets through the VXLAN tunnel to the remote node on which container App-B is deployed, which has similar infrastructure and virtual devices to receive the packets.

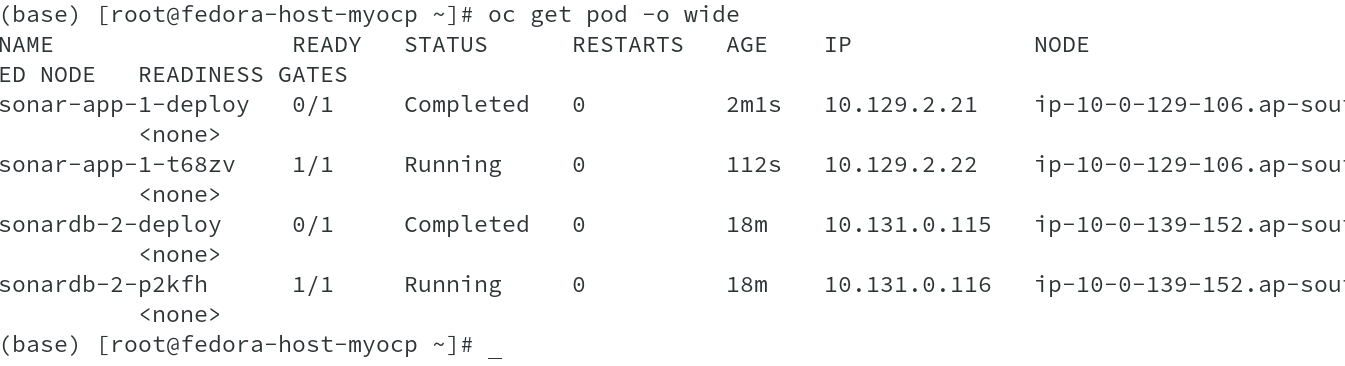
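Because every pod-to-pod packet between nodes is wrapped in VXLAN as described above, the encapsulation eats into the usable packet size. As a worked example, assuming an IPv4 underlay without VLAN tags and a 1500-byte MTU on the host interface, the bytes added in front of the pod's IP packet are the outer IPv4 header, the outer UDP header, the VXLAN header, and the inner Ethernet header of the pod's frame:

```latex
$$
\text{overhead} = \underbrace{20}_{\text{outer IPv4}} + \underbrace{8}_{\text{UDP}} + \underbrace{8}_{\text{VXLAN}} + \underbrace{14}_{\text{inner Ethernet}} = 50\ \text{bytes}
$$
$$
\text{MTU}_{\text{pod}} = \text{MTU}_{\text{host}} - 50 = 1500 - 50 = 1450\ \text{bytes}
$$
```

This is why OpenShift SDN expects the cluster network MTU to be 50 bytes smaller than the MTU of the node's primary interface.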

Having said all this, let's proceed to validate our understanding using the pods we deployed as part of our earlier discussion (refer to the article: Networking with OpenShift – Part-1).

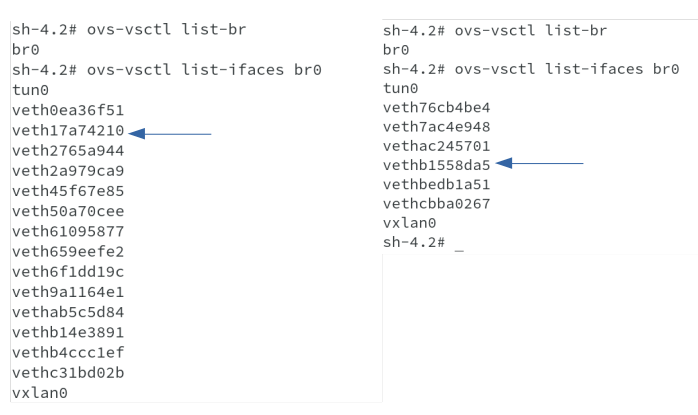

I quickly connect to both nodes on which our application pods are deployed to look at the interfaces we have discussed, and the output shows all interfaces available as part of the OVS SDN. You can also see vxlan_sys_4789 on the host, which carries the VXLAN traffic.

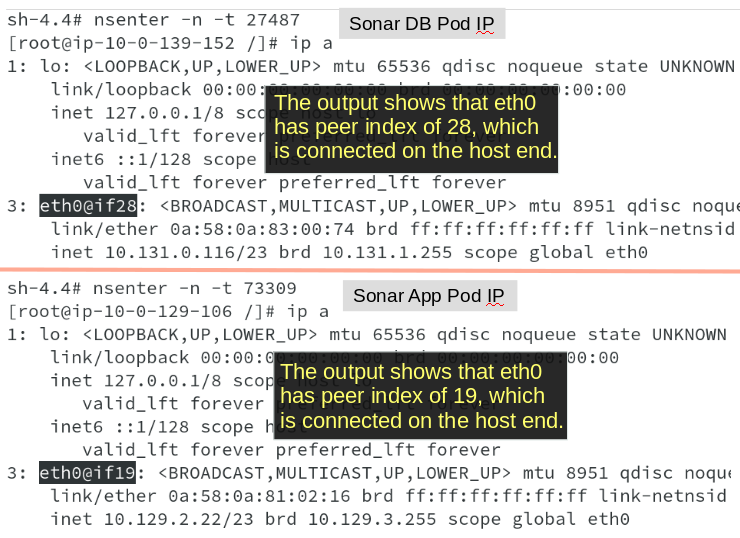
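The same check can be scripted. The sketch below assumes you already have a root shell on the node (for example through `oc debug node/<node-name>` followed by `chroot /host`) and that iproute2's `ip -o link show` format applies; `check_sdn_ifaces` is a hypothetical helper name. vxlan0 is deliberately left out because it exists only inside OVS.

```shell
# Hypothetical helper: read `ip -o link show` output on stdin and report
# whether each OpenShift SDN host interface discussed above is present.
# vxlan0 is internal to OVS, so it is not expected in `ip` output.
check_sdn_ifaces() {
  # Field 2 of each line is "name" or "name@ifN"; keep only the name.
  names=$(awk -F': ' '{ sub(/@.*/, "", $2); print $2 }')
  missing=0
  for ifname in br0 tun0 vxlan_sys_4789; do
    if printf '%s\n' "$names" | grep -qxF "$ifname"; then
      echo "present: $ifname"
    else
      echo "missing: $ifname"
      missing=1
    fi
  done
  # Non-zero exit status if any expected interface is absent.
  return "$missing"
}

# Usage on the node:
#   ip -o link show | check_sdn_ifaces
```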

Now the important task is to identify the interfaces that connect our containers to the OVS bridge br0, as there may be many vethXXXXX interfaces on the host from other containers as well. To get this information, I use the PIDs we retrieved earlier to enter the network namespace of each container and get the interface information with the ip address command. The output shows the eth0 interface of each container (27487 is the PID of the DB container and 73309 is the PID of the App container), and you can also see the respective hostnames to confirm this. In the interface name eth0@ifNN, the number NN after @if is the index of the remote peer of the veth pair, that is, its host-side end.

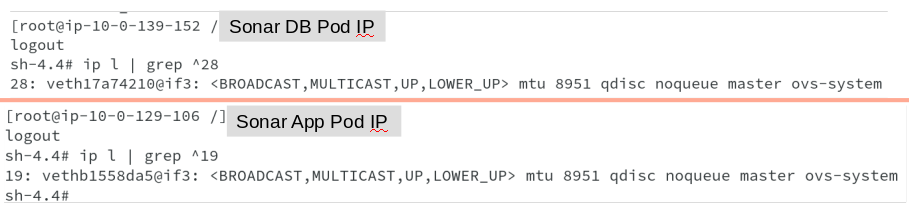

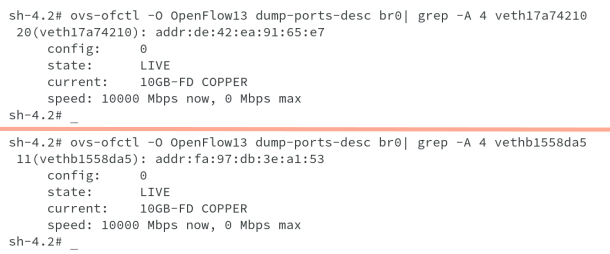
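The peer-index lookup can be sketched as two small shell functions, assuming root on the node, `nsenter` from util-linux, and the container PIDs from part 1. The helper names `peer_ifindex` and `container_peer_ifindex` are hypothetical.

```shell
# Hypothetical helper: read `ip -o link show eth0` output captured inside a
# container's network namespace and print the host-side peer ifindex,
# i.e. NN in "eth0@ifNN".
peer_ifindex() {
  sed -n 's/^[0-9]*: eth0@if\([0-9]*\):.*/\1/p'
}

# Hypothetical helper: enter the network namespace (-n) of the process with
# PID $1 (-t) and print the peer ifindex of its eth0.
container_peer_ifindex() {
  nsenter -t "$1" -n ip -o link show eth0 | peer_ifindex
}

# Usage on the node, as root (27487 was the DB container in this walkthrough):
#   container_peer_ifindex 27487
```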

Once we know the remote peer index, we can run the ip link command on the hosts and look for that index number, as shown below. We find peer index 28 on the DB host and peer index 19 on the App host; the corresponding veth interfaces are veth17a74210 and vethb1558da5, respectively.

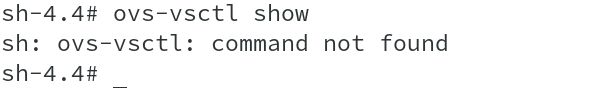
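Matching the index on the host side is a one-line `awk` filter over `ip -o link show`. This is a sketch with a hypothetical helper name, `veth_by_ifindex`; it strips the `@ifN` suffix that `ip` appends to veth names.

```shell
# Hypothetical helper: read `ip -o link show` output from the node on stdin
# and print the name of the interface whose ifindex (field 1) equals $1.
veth_by_ifindex() {
  awk -F': ' -v idx="$1" '$1 == idx { sub(/@.*/, "", $2); print $2 }'
}

# Usage on the DB node from this walkthrough (peer index 28):
#   ip -o link show | veth_by_ifindex 28
```

Run on the DB node with index 28, this should print the same veth found above, veth17a74210.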

Now that we have found the veth interfaces, which should be connected to the OVS bridge br0, let us verify that on the OVS bridge itself. When I try to run OVS commands on the host, I get the error message ‘command not found’, indicating that the OVS binaries are not installed on the host. Unlike OCP3.x, where we could install arbitrary packages on the host, OCP4.x is based on RHCOS, which is immutable by design. Hence, we cannot install the Open vSwitch package to get the required OVS binaries.

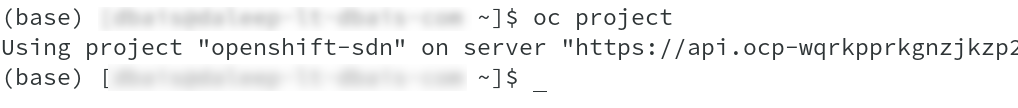

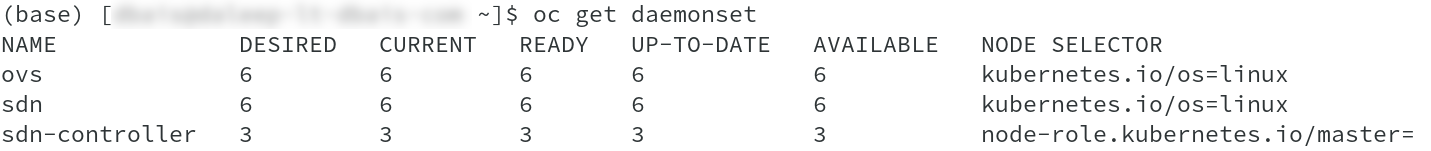

In OCP4.x, almost everything is handled by operators, which fits the immutable nature of the platform. The SDN is no exception: it is managed by the Cluster Network Operator, which creates and maintains the pods that provide the network and SDN capabilities using DaemonSet resources. As a user with cluster-admin privileges, we switch to the openshift-sdn project and check the DaemonSets.

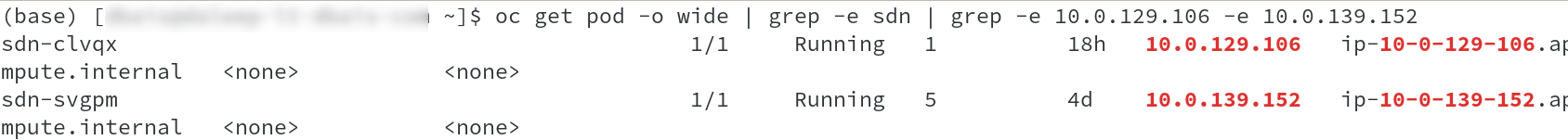

At this point, I need the IP addresses of the SDN pods running on the nodes where our App and DB pods are deployed, so that we can connect to those SDN pods and check the veth interfaces of our containers that are connected to OVS.

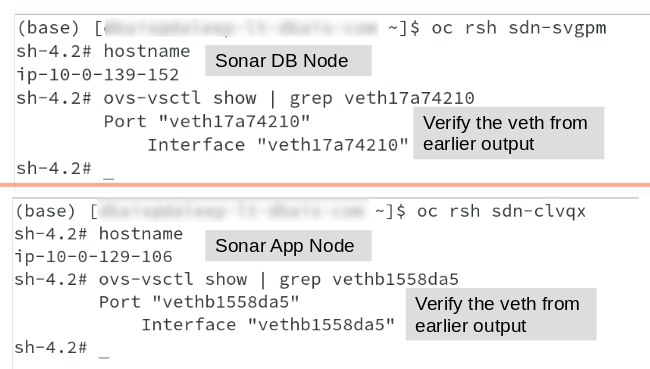
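Finding the SDN pod for a given node can also be scripted with `oc`. This is a sketch for a cluster-admin session; the helper names are hypothetical, and the `app=sdn` label on the SDN DaemonSet pods is an assumption worth confirming with `oc get pods -n openshift-sdn --show-labels` before relying on it.

```shell
# Hypothetical helpers for an OCP 4.x cluster running OpenShift SDN.
# ASSUMPTION: the SDN DaemonSet pods carry the label app=sdn.

# List the DaemonSets in the openshift-sdn project.
sdn_daemonsets() {
  oc get daemonset -n openshift-sdn
}

# Print "<pod-name> <pod-IP>" for the SDN pod scheduled on node $1,
# using a field selector on the pod's spec.nodeName.
sdn_pod_on_node() {
  oc get pods -n openshift-sdn -l app=sdn \
    --field-selector "spec.nodeName=$1" \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.podIP}{"\n"}{end}'
}

# Usage (node name is a placeholder):
#   sdn_pod_on_node <node-name>
```

Because the SDN pods run on the host network, the pod IP printed here is typically the node's own IP address.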

Once we oc rsh into an SDN pod, we can run the ovs-vsctl show command to list the interfaces on the host's OVS bridge, and we can see our vethXXXXX interfaces attached to it as well.

This confirms that our containers are properly connected to the OVS bridge, which is now responsible for the flows that forward packets to the respective nodes.

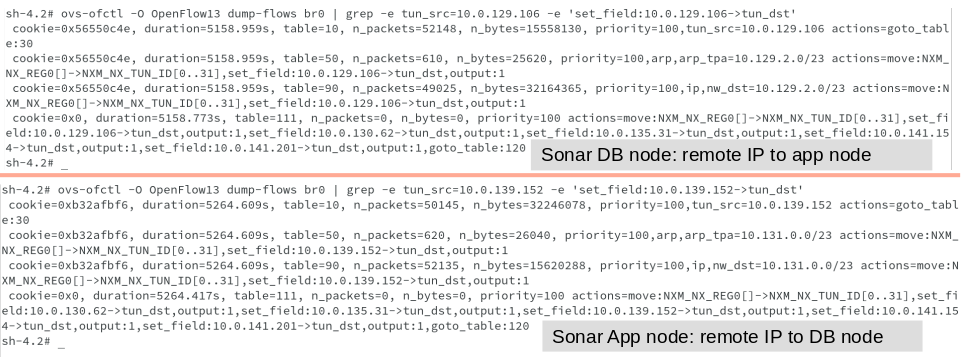
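The attachment check can be sketched with `ovs-vsctl list-ports br0`, a standard ovs-vsctl subcommand that prints one port name per line. The sketch uses `oc exec` instead of an interactive `oc rsh`; the helper names are hypothetical, and it assumes the SDN pod's default container has the OVS binaries, as it did in the `oc rsh` session above.

```shell
# Hypothetical helper: succeed if interface $2 is a port on br0, as seen
# from SDN pod $1 in the openshift-sdn project.
veth_on_br0() {
  oc exec -n openshift-sdn "$1" -- ovs-vsctl list-ports br0 | grep -qxF "$2"
}

# Hypothetical helper: check several veth names against one SDN pod and
# return non-zero if any of them is not attached to br0.
check_veths() {
  pod="$1"; shift
  rc=0
  for veth in "$@"; do
    if veth_on_br0 "$pod" "$veth"; then
      echo "attached: $veth"
    else
      echo "NOT attached: $veth"
      rc=1
    fi
  done
  return "$rc"
}

# Usage (pod name is a placeholder):
#   check_veths <sdn-pod-on-db-node> veth17a74210
```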

Every remote node should have a flow matching `tun_src=<node_IP_address>` (for incoming VXLAN traffic from that node) and another flow including the action `set_field:<node_IP_address>->tun_dst` (for outgoing VXLAN traffic to that node). For example:

#ovs-ofctl -O OpenFlow13 dump-flows br0 | grep -e tun_src=10.0.129.106 -e 'set_field:10.0.129.106->tun_dst'

The ovs-ofctl program is a command line tool for monitoring and administering OpenFlow switches. It can also show the current state of an OpenFlow switch, including features, configuration, and table entries.

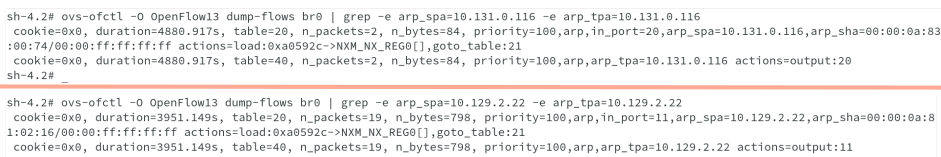

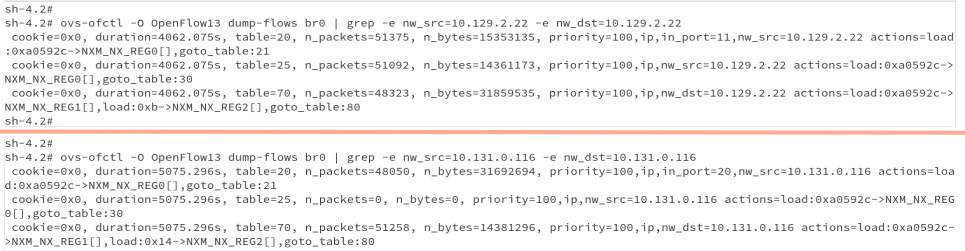
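The two remote-node checks can be combined into one helper that reads the `dump-flows` output and counts both kinds of flows. A minimal sketch, with `check_remote_node_flows` as a hypothetical name; unlike a plain `grep`, it escapes the dots and refuses a trailing digit, so 10.0.129.10 will not match flows for 10.0.129.106.

```shell
# Hypothetical helper: read `ovs-ofctl -O OpenFlow13 dump-flows br0` output
# on stdin and count the two kinds of flows expected for remote node IP $1.
check_remote_node_flows() {
  # Escape the dots so the IP is matched literally in the regexes below.
  ip_re=$(printf '%s' "$1" | sed 's/\./\\./g')
  flows=$(cat)
  # Incoming VXLAN traffic from that node: tun_src=<ip> not followed by a digit.
  in_n=$(printf '%s\n' "$flows" | grep -c -E "tun_src=${ip_re}([^0-9]|\$)")
  # Outgoing VXLAN traffic to that node: the action set_field:<ip>->tun_dst.
  out_n=$(printf '%s\n' "$flows" | grep -c -E "set_field:${ip_re}->tun_dst")
  echo "incoming=${in_n} outgoing=${out_n}"
  # Succeed only if both directions have at least one flow.
  [ "$in_n" -gt 0 ] && [ "$out_n" -gt 0 ]
}

# Usage inside an SDN pod (node IP from the example above):
#   ovs-ofctl -O OpenFlow13 dump-flows br0 | check_remote_node_flows 10.0.129.106
```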

Every local pod should have flows matching `arp_spa=<pod_IP_address>` and `arp_tpa=<pod_IP_address>` (for incoming and outgoing ARP traffic for that pod), and flows matching `nw_src=<pod_IP_address>` and `nw_dst=<pod_IP_address>` (for incoming and outgoing IP traffic for that pod). For example:

#ovs-ofctl -O OpenFlow13 dump-flows br0 | grep -e arp_spa=10.131.0.116 -e arp_tpa=10.131.0.116

#ovs-ofctl -O OpenFlow13 dump-flows br0 | grep -e nw_src=10.131.0.116 -e nw_dst=10.131.0.116

From the preceding outputs, you can also get the port numbers (in_port) for both vethXXXXX interfaces. Let us verify this information as shown below.

We have now seen enough to understand how OVS and the SDN do all of this automatically, giving us a virtual, cluster-wide network. The next output also shows all interfaces connected to the br0 bridge on both nodes.

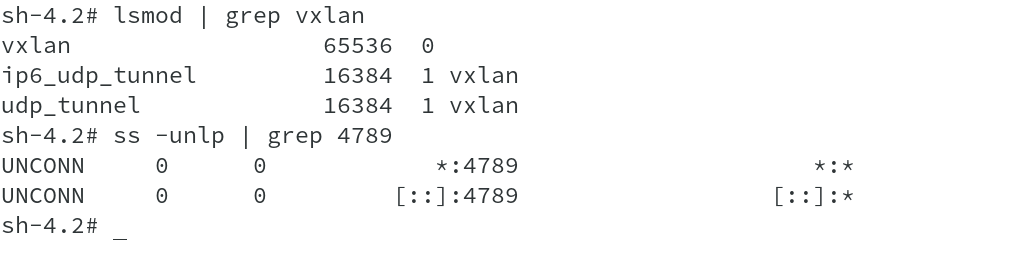

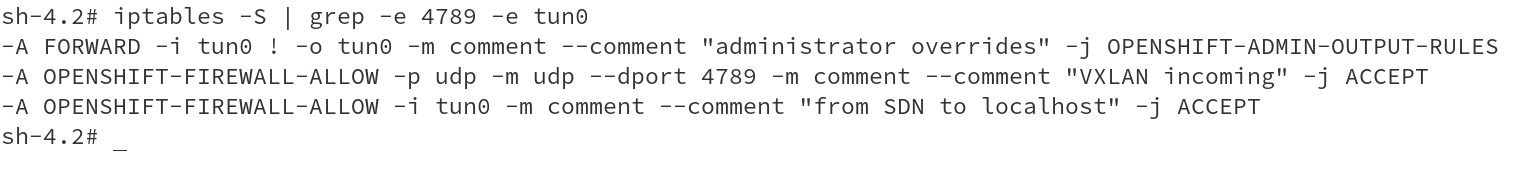
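The per-pod flow checks and the port-number lookup can be sketched the same way. Both helpers are hypothetical: `check_local_pod_flows` reads `ovs-ofctl -O OpenFlow13 dump-flows br0` output, and `ofport_of` reads `ovs-ofctl -O OpenFlow13 show br0` output, whose port lines have the form ` N(name): addr:...`.

```shell
# Hypothetical helper: read dump-flows output on stdin and print how many
# flows match each field expected for local pod IP $1.
check_local_pod_flows() {
  # Escape the dots so the IP is matched literally.
  ip_re=$(printf '%s' "$1" | sed 's/\./\\./g')
  flows=$(cat)
  rc=0
  for field in arp_spa arp_tpa nw_src nw_dst; do
    # Refuse a trailing digit, so 10.131.0.11 does not count 10.131.0.116.
    n=$(printf '%s\n' "$flows" | grep -c -E "${field}=${ip_re}([^0-9]|\$)")
    echo "${field}=${n}"
    [ "$n" -gt 0 ] || rc=1
  done
  # Non-zero if any of the four fields has no matching flow.
  return "$rc"
}

# Hypothetical helper: read `ovs-ofctl -O OpenFlow13 show br0` output on
# stdin and print the OpenFlow port number of interface $1.
ofport_of() {
  sed -n "s/^ *\([0-9][0-9]*\)($1):.*/\1/p"
}

# Usage inside an SDN pod (pod IP from the example above):
#   ovs-ofctl -O OpenFlow13 dump-flows br0 | check_local_pod_flows 10.131.0.116
#   ovs-ofctl -O OpenFlow13 show br0 | ofport_of veth17a74210
```

`ovs-vsctl get Interface <veth-name> ofport` returns the same number directly, which gives an independent cross-check of the in_port values seen in the flows.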

Now let us look at the VXLAN interface on the host and how it performs the forwarding for us. As discussed earlier, OVS creates this interface directly in the kernel, and it captures all the traffic (for all VNIs) on port 4789. Running a simple netstat command for UDP port 4789 shows this, and checking the loaded kernel modules shows that the vxlan module is loaded.

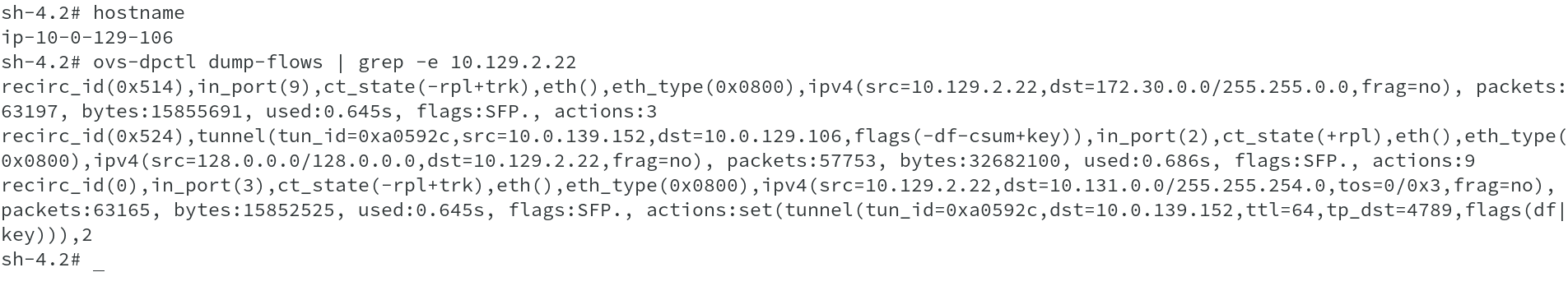
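Both host checks can be wrapped in one helper. This sketch assumes root on the node and that `netstat` (from net-tools) and `lsmod` are available there, as they were in the output above; `vxlan_ready` is a hypothetical name, and `ss -nlu` could be substituted if netstat is not installed, since it prints local addresses in the same `address:port` form.

```shell
# Hypothetical helper mirroring the two checks above: a socket bound to
# UDP port 4789, and the vxlan kernel module loaded.
vxlan_ready() {
  rc=0
  # -n numeric addresses, -l listening sockets only, -u UDP only.
  if netstat -nlu | grep -q ':4789 '; then
    echo "udp/4789: listening"
  else
    echo "udp/4789: not listening"; rc=1
  fi
  # lsmod prints one module per line with its name in the first column.
  if lsmod | grep -q '^vxlan '; then
    echo "vxlan module: loaded"
  else
    echo "vxlan module: not loaded"; rc=1
  fi
  return "$rc"
}

# Usage on the node, as root:
#   vxlan_ready
```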

Using the ovs-dpctl command, we will now dump the datapath flows of the OVS SDN to check the VXLAN tunnel information. ovs-dpctl is a tool to create, modify, and delete OVS datapaths; a datapath is a collection of virtual and physical ports exposed through OpenFlow.

In the output above, the src and dst fields of the tunnel clearly indicate the node initiating the tunnel and the node terminating it. Listing the iptables rules for port 4789 shows the rule that allows incoming VXLAN traffic.

Also, running the ip address command on the host lists the vxlan_sys_4789 interface, and running ovs-vsctl in the SDN pod shows the vxlan0 interface, which is internal to OVS.

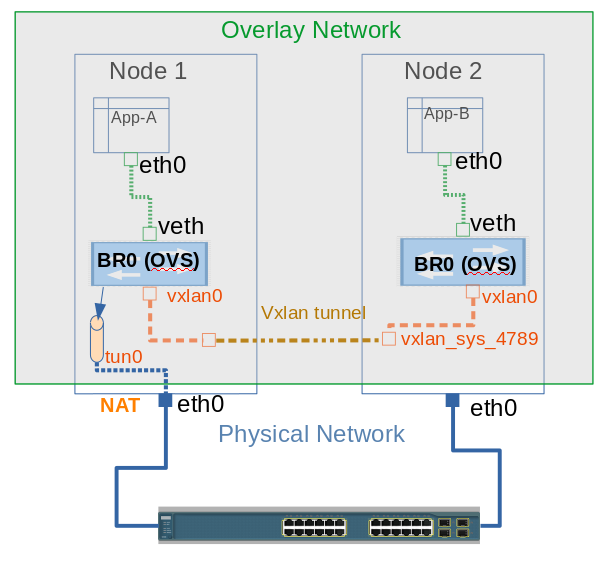

This brings us to the end of our journey through the SDN, in which we have seen how OVS uses OpenFlow rules to forward application traffic, with the help of iptables/Netfilter rules that are created and destroyed as needed. Let me now update the image we saw at the beginning of this discussion; I am sure you will agree with the change and be able to follow it.

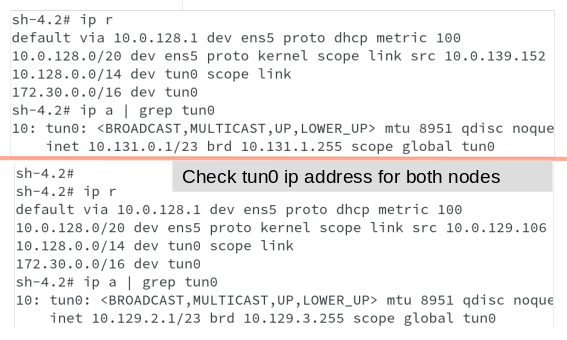

We have seen all the OVS interfaces except one: tun0. tun0 is a port internal to OVS, and it is assigned the cluster subnet gateway address for external access. The SDN interacts with Netfilter to create the rules for external network access through NAT.
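To look at tun0 and the NAT side yourself, the sketch below prints the address assigned to tun0 and lists the MASQUERADE rules in the NAT table. It assumes root on the node and iproute2's one-line `ip -o -4 addr show tun0` format; the helper names are hypothetical, and the sketch deliberately greps for the MASQUERADE target instead of guessing the SDN's chain names.

```shell
# Hypothetical helper: read `ip -o -4 addr show tun0` output on stdin and
# print the IPv4 address/prefix assigned to tun0 (the cluster subnet gateway).
tun0_gateway() {
  awk '$2 == "tun0" && $3 == "inet" { print $4 }'
}

# Hypothetical helper: list NAT-table rules whose target is MASQUERADE.
masquerade_rules() {
  iptables -t nat -S | grep -- '-j MASQUERADE'
}

# Usage on the node, as root:
#   ip -o -4 addr show tun0 | tun0_gateway
#   masquerade_rules
```

If the node's host subnet were 10.131.0.0/23, for instance, the gateway printed for tun0 would typically be 10.131.0.1/23, the first address of that subnet.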

It was great traversing the nooks and tunnels of OVS and reaching our destination, thanks to the OCP SDN and Open vSwitch. I hope this journey was an exciting and fun adventure.

Enjoy digging in and finding new treasure !! Happy reading !!