Seal the Secret !!

2022-Aug-18

By: DALEEP SINGH

2020-Jul-12 09:07:50

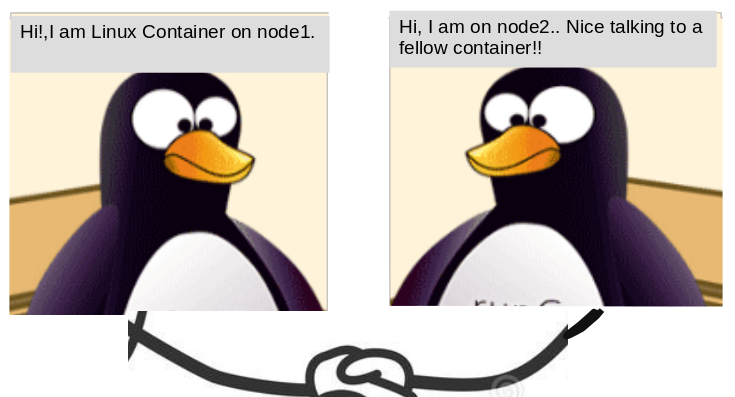

I have heard from many people how amazed they are by OpenShift networking and how easy it makes their lives. I have also met people who want to know how all of this works under the hood. I decided to decipher what goes on behind the scenes and how exactly we get this transparent networking functionality. The discussion was too long to complete in one article, so I divided it into a series, this being the first part.

OpenShift provides networking between the pods of a deployed application and assigns each pod an IP address from an internal network. It uses software-defined networking (SDN) to extend that network across the cluster, so that pods on different nodes can also communicate with each other and applications built on a microservices architecture can run on the platform. The SDN creates an overlay network. The SDN solution used in OpenShift is Open vSwitch, more commonly known as OVS. It includes multiple plug-ins to configure networks and provide additional functions as required. I will give a brief overview of the available plug-ins later.

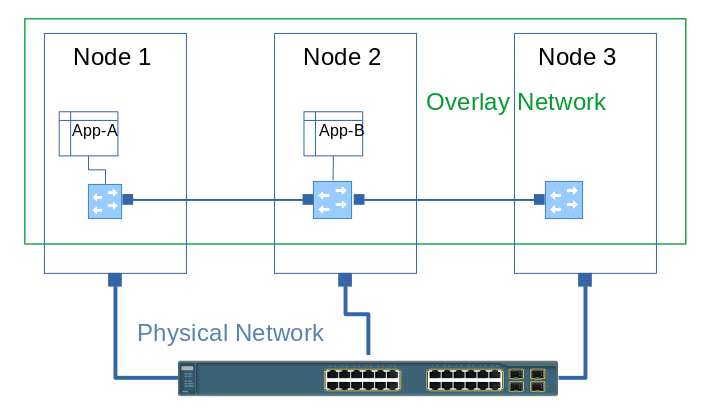

A very simple definition from Wikipedia: An overlay network is a computer network that is layered on top of another network.

Nodes in the overlay network can be thought of as being connected by virtual or logical links, each of which corresponds to a path, perhaps through many physical links, in the underlying network.

The diagram below shows Node1, Node2 and Node3 connected to each other by physical network devices. The applications running on the cluster, however, connect to each other through virtual switching that is deployed and managed on the overlay network with the help of an SDN solution.

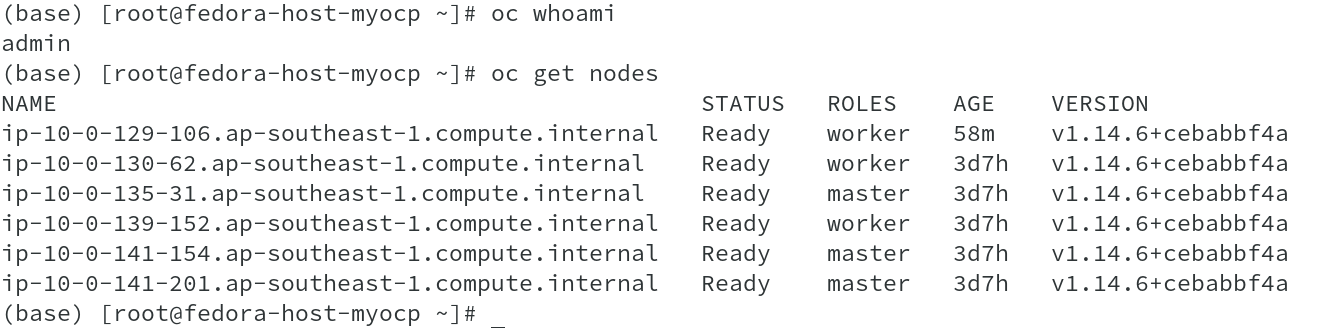

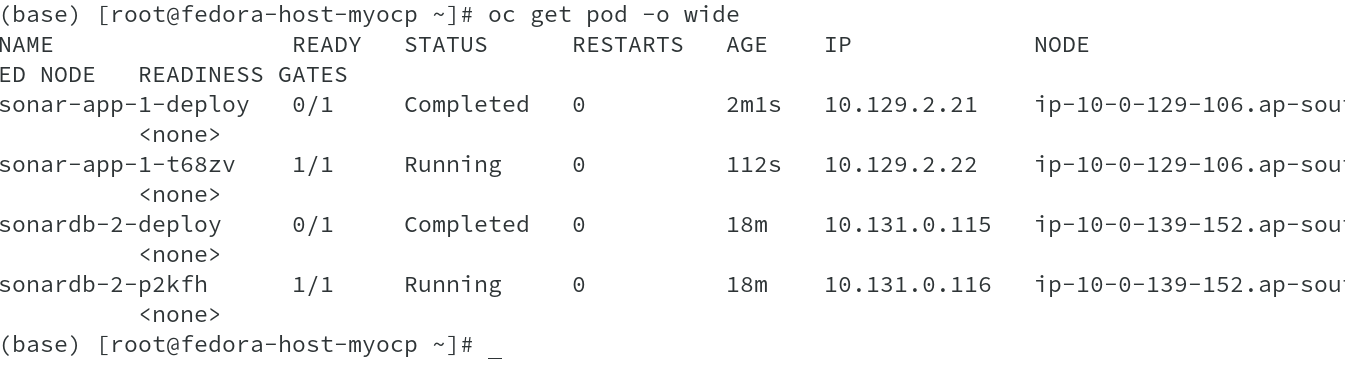

To demonstrate the networking and SDN, I used an OpenShift cluster with 3 master and 3 worker nodes. On the cluster, I deployed an application that has a frontend and a database backend. We saw the deployment of SonarQube on OCP in an earlier article (Deploy SonarQube on Red Hat OpenShift).

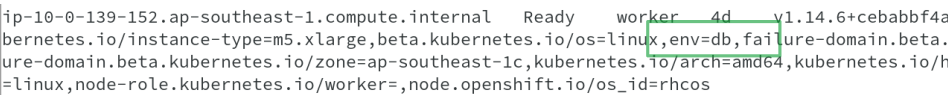

I deployed the frontend on one node and ensured that the database pod does not get deployed on the same node.

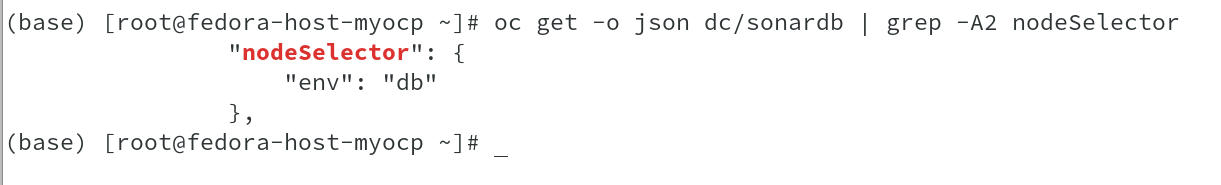

To achieve this, I used a node selector that matches a label on the specific node. The key-value pair in this case is env=db, which you can see in the deployment config as well as on the node.
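The labeling and the node selector can also be applied from the command line. The following is a minimal sketch, not the exact steps used for this cluster: the function name `pin_to_labeled_node` and its arguments are made up for illustration, and it assumes the workload is a DeploymentConfig that you are allowed to patch.

```shell
#!/usr/bin/env bash
# Sketch: pin a workload to a labeled node with a node selector.
# The function name and arguments are illustrative, not from the article.

pin_to_labeled_node() {
  local node="$1"   # node that should host the pod
  local dc="$2"     # DeploymentConfig to constrain
  local key="$3"    # label key, e.g. env
  local value="$4"  # label value, e.g. db

  # Label the node; --overwrite makes the call safe to repeat.
  oc label node "$node" "${key}=${value}" --overwrite

  # Add the matching nodeSelector to the pod template of the DeploymentConfig.
  oc patch "dc/${dc}" --type=merge \
    -p "{\"spec\":{\"template\":{\"spec\":{\"nodeSelector\":{\"${key}\":\"${value}\"}}}}}"
}

# Example call (placeholders, not real names):
# pin_to_labeled_node <Node-Name> <DC-Name> env db
```

Once the pod template carries the selector, the scheduler only places the pod on nodes that have the env=db label.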

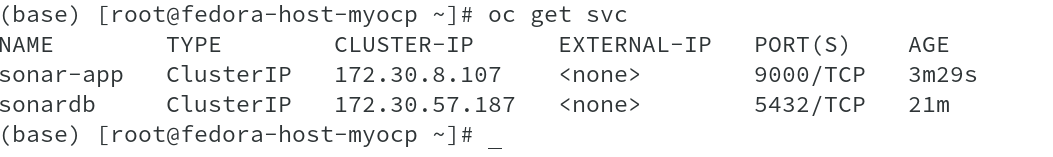

The deployment also created a service for each of the two pods and allocated each service a static IP address from the service network.

Now that we have all the resources needed for the application to run, let's start our journey to decipher the secret of SDN with OVS.

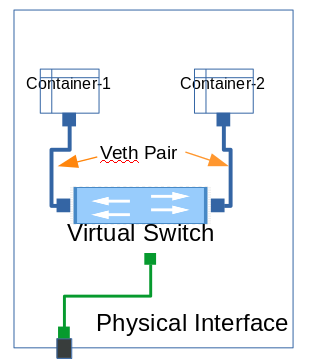

The SDN is responsible for creating the network functions and devices needed for the virtual connection, which includes both the control plane and the data plane. To connect a container to the network, virtual devices such as a virtual interface and a virtual patch cord with its two ends (a VETH pair) have to be created and connected to the virtual switch (as shown in the graphical representation below). We will look at that connection first for both containers and later move on to the data flow between the nodes. Let's consider this our first step in this direction.

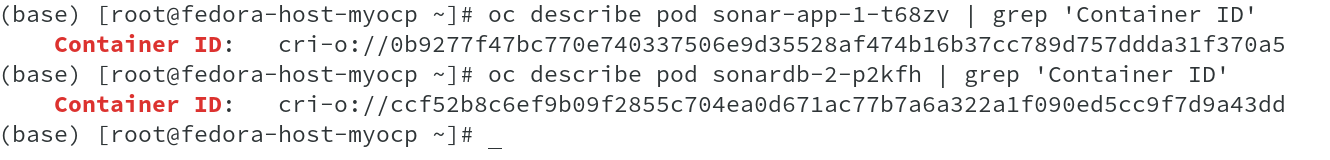

The very first action is to get the container ID of both containers. We need it to identify the network interface connected to each container, that is, the two ends of the VETH pair: one end is connected to the container's eth0 and the other end to the virtual switch that is part of the OVS SDN.

As seen in the output above, I retrieved the container IDs of both pods, App and DB, using oc describe pod <Pod-Name>. Now that we have the container IDs and the names of the nodes on which these containers are deployed (the oc get pod -o wide command run earlier shows the node names), we will use oc debug node/<Node Name> to access the nodes and run commands.
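If you prefer not to scan the oc describe output by eye, the same two values can be read with a JSONPath query. This is only a sketch: the helper names `container_id_of` and `node_of` are made up here, and it assumes a pod with a single container, so index 0 is the one we want.

```shell
#!/usr/bin/env bash
# Sketch: read a pod's container ID and node name with JSONPath.
# Helper names are illustrative; assumes a single-container pod.

container_id_of() {
  local pod="$1"
  local raw
  # The status field holds "<runtime>://<id>", for example "cri-o://<id>".
  raw="$(oc get pod "$pod" -o jsonpath='{.status.containerStatuses[0].containerID}')"
  # Strip the "<runtime>://" prefix so only the bare ID remains.
  printf '%s\n' "${raw#*://}"
}

node_of() {
  # Name of the node the pod was scheduled on.
  oc get pod "$1" -o jsonpath='{.spec.nodeName}'
}

# Example calls (placeholder pod name):
# container_id_of <Pod-Name>
# oc debug node/"$(node_of <Pod-Name>)"
```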

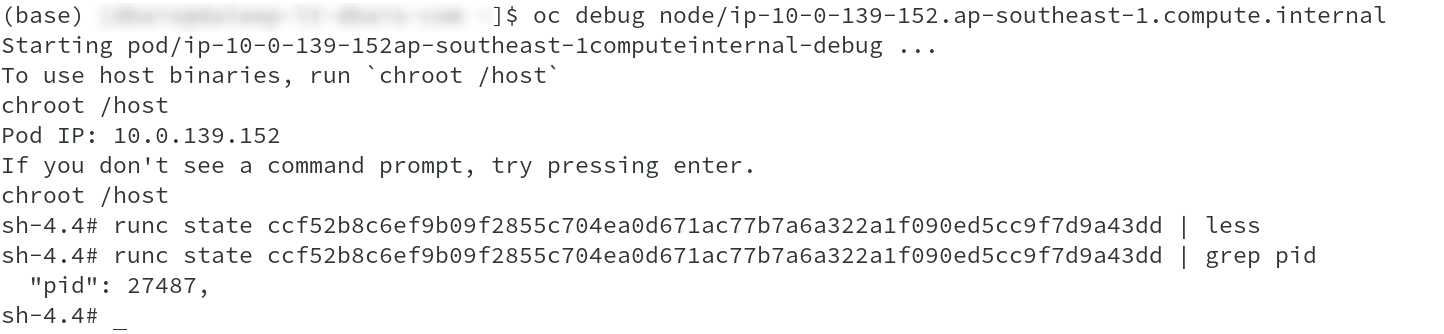

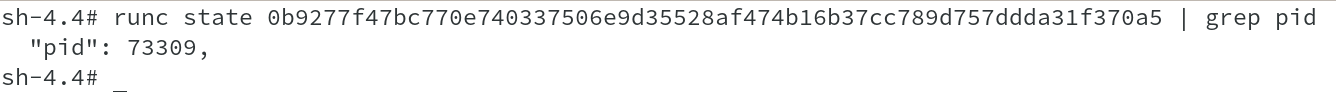

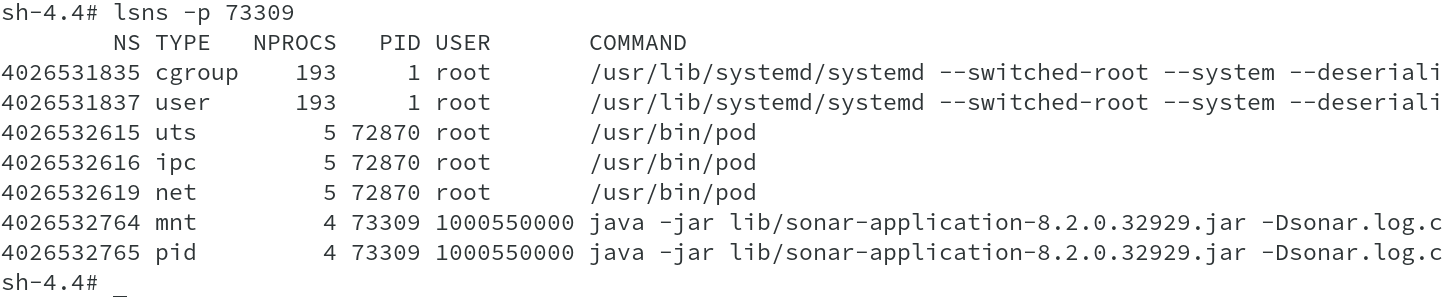

Once connected to the node, we will quickly do chroot /host ( refer to earlier article ‘Behind oc debug node’ to know more on this ) and run command runc state <Container Id> to get the PID’s associated with respective

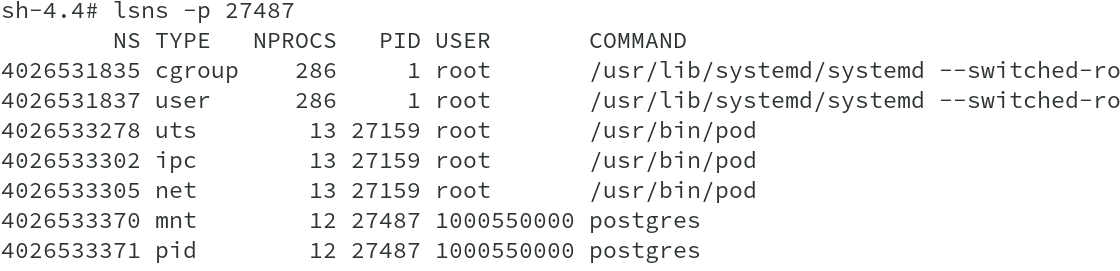

containers. Pid’s have been used to also list the namespaces associated with the containers and we can clearly see the processes running on the containers.

The output shows that this is the DB container and running postgres process running with UID of 1000550000.
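The steps on the node can be strung together roughly as follows. Treat this as a sketch to be run after chroot /host: the helper names are made up, the PID is pulled out of the runc state JSON with sed rather than a JSON parser, and lsns and ps are my assumption for listing the namespaces and the process, since the exact commands are only visible in the screenshots.

```shell
#!/usr/bin/env bash
# Sketch: from container ID to PID, namespaces and process.
# Run on the node after `chroot /host`. Helper names are illustrative.

pid_from_state() {
  # Reads `runc state` JSON on stdin and prints the value of its "pid" field.
  sed -n 's/^[[:space:]]*"pid":[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

inspect_container() {
  local cid="$1"
  local pid
  pid="$(runc state "$cid" | pid_from_state)"
  echo "PID: ${pid}"
  # Namespaces the process belongs to (net, mnt, pid, ...).
  lsns -p "$pid"
  # Owner and command line of the container's main process.
  ps -o user,pid,args -p "$pid"
}

# Example call (placeholder ID):
# inspect_container <Container Id>
```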

We will perform similar commands on the other node, which hosts App ( frontend ) container. We have PID of 73309 for the container and checking the namespaces associated to this pid indicates that this is sonarqube application, which is what we wanted. So we are good so far, a baby step.

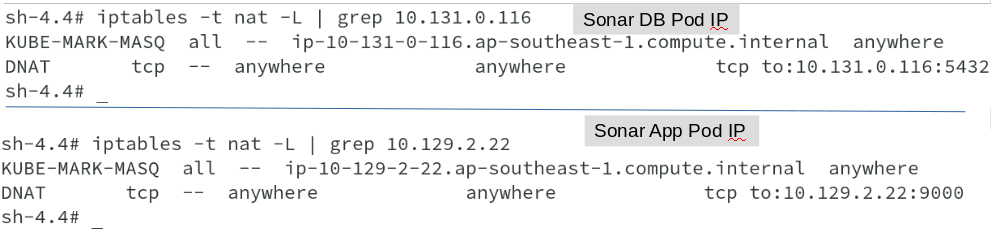

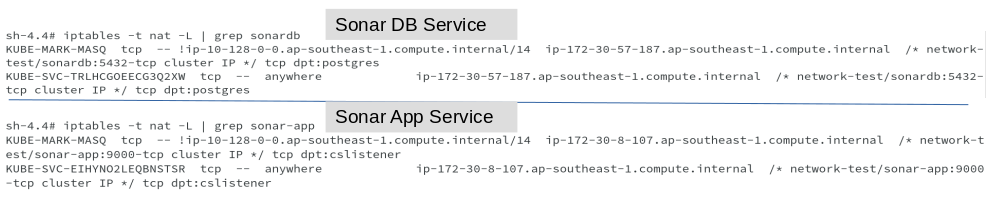

As seen earlier, OpenShift created a service corresponding to each deployment. The SDN then creates iptables rules to forward traffic for the service and pod IPs, as the captured outputs show.

The same iptables rules are created on all nodes, not just on the node where the pod is deployed.

In this part, we saw how the container process is created and how the SDN ensures that the required iptables rules are in place to enable communication. In the next part, we will take a leap into OVS and understand how containers connect to the SDN using the VETH pair.
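To check this yourself, you can filter the NAT table on any node for a service or pod IP. The sketch below uses made-up helper names and assumes the rules live in the nat table, which is where kube-proxy style rules (chains such as KUBE-SERVICES) are normally programmed. Run it on the node after chroot /host.

```shell
#!/usr/bin/env bash
# Sketch: list the NAT rules that mention a given service or pod IP.
# Helper names are illustrative.

rules_for_ip() {
  # Filters iptables-save output on stdin.
  # -F treats the IP as a literal string; -w stops 10.0.0.1 from matching 10.0.0.10.
  grep -wF -- "$1"
}

show_rules_for_ip() {
  # Dump only the nat table and keep the lines that mention the IP.
  iptables-save -t nat | rules_for_ip "$1"
}

# Example call (placeholder IP):
# show_rules_for_ip <Service-IP>
```

Running the same command on a node that does not host the pod should return the same rules, which confirms the point above.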